
One of the holy grails of website animation is the scroll-tied, full-motion animation seen on Apple's illustrious promo pages for its flagship products. If Apple can do it, surely it's easy, right? Unfortunately, it's not quite so simple.

Executing these animations successfully requires deep collaboration between engineers and designers, both when producing the assets and when implementing industry-leading scroll-tied animations of this kind.

Standard Approaches

Over the years, many developers have attempted to solve this problem in different ways, and from that effort a number of well-known, commonly recommended approaches have emerged, each with significant downsides in practice. Common approaches include:

Video with currentTime manipulation

Pros: simple to implement, and it leans fully on the render quality and compression level of the video file.

Cons: lags behind the scroll position (frame sync is not tightly guaranteed), and files encoded with more I-frames (the complete "solid" frames that the encoding diff is based on, which are what allow you to seek efficiently) are heavy.
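A minimal sketch of the scroll-to-`currentTime` mapping, assuming the animation is pinned between two scroll offsets (`startY` and `endY` are hypothetical parameters that a real page would measure from its layout) and that the export frame rate is known, since browsers do not expose a video's frame rate. The function is pure so it can be tested outside a browser; the commented usage shows where it would plug into a scroll handler. Snapping to whole frames lets the handler skip redundant seeks, but the browser still decides when each seek actually lands, which is where the lag comes from.

```typescript
// Maps a scroll position to a video time for a scroll-pinned section.
// startY/endY: scroll offsets where the animation starts and ends (assumed inputs).
// fps: frame rate the video was exported at (passed in; not exposed by the browser).
function scrollToVideoTime(
  scrollY: number,
  startY: number,
  endY: number,
  duration: number,
  fps: number
): number {
  // Normalise to 0..1 and clamp outside the pinned range.
  const progress = Math.min(1, Math.max(0, (scrollY - startY) / (endY - startY)));
  // Snap to a whole frame so scroll events within one frame map to the same time.
  const frameCount = Math.round(duration * fps);
  const frame = Math.round(progress * (frameCount - 1));
  return frame / fps;
}

// Browser usage (not executed here):
// let lastTime = -1;
// window.addEventListener("scroll", () => {
//   const t = scrollToVideoTime(window.scrollY, 1000, 4000, video.duration, 30);
//   if (t !== lastTime) {
//     lastTime = t;
//     video.currentTime = t; // the seek itself completes asynchronously
//   }
// });
```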

Traditional Image Sequence (jpg or png, css or canvas based)

Pros: tightly tied to user interaction (scrolling, panning, accelerometer movement etc.) as we directly control the frame render loop.

Cons: a practical upper limit on asset resolution because of the heavier page weight (which depends on the resolution and number of frames needed), lots of assets to manage, and no transparency or blending without further increasing image weight.
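The tight coupling comes from owning the render loop. A minimal sketch, assuming the frames are already decoded and a hypothetical `draw` callback paints frame `index` (in a browser, something like `ctx.drawImage(images[index], 0, 0)` on a canvas): the player maps scroll progress to a frame index and only redraws when that index changes.

```typescript
// Tracks which frame of an image sequence should be visible and calls
// `draw` only when that frame changes, so repeated scroll events are cheap.
class SequencePlayer {
  private current = -1;
  private readonly frameCount: number;
  private readonly draw: (index: number) => void;

  constructor(frameCount: number, draw: (index: number) => void) {
    this.frameCount = frameCount;
    this.draw = draw;
  }

  // progress: 0..1 scroll progress through the pinned section.
  update(progress: number): void {
    const clamped = Math.min(1, Math.max(0, progress));
    const index = Math.round(clamped * (this.frameCount - 1));
    if (index !== this.current) {
      this.current = index;
      this.draw(index);
    }
  }
}

// Usage: a hypothetical 100-frame sequence.
const drawn: number[] = [];
const player = new SequencePlayer(100, (i: number) => { drawn.push(i); });
player.update(0);     // draws frame 0
player.update(0.001); // still frame 0, no redraw
player.update(0.5);   // draws frame 50
player.update(2);     // clamped, draws frame 99
```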

Sprite Sheet Sequence

Pros: same as image sequences, plus faster to load (fewer images).

Cons: browsers have hard upper limits on asset resolution, and because every frame goes into one big image, a sequence hits those limits quickly.
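A quick way to see the resolution problem is to compute the sheet size before exporting. This sketch packs equally sized frames into a near-square grid; the 120-frame, 1280x720 example is chosen purely for illustration. The result can be compared against the maximum texture or canvas size of the target devices (in WebGL, `gl.getParameter(gl.MAX_TEXTURE_SIZE)`; the value varies by GPU, and 4096 and 8192 px are common).

```typescript
interface SheetLayout {
  columns: number;
  rows: number;
  width: number;  // total sheet width in px
  height: number; // total sheet height in px
}

// Packs `frameCount` frames of equal size into a near-square grid.
function layoutSpriteSheet(frameWidth: number, frameHeight: number, frameCount: number): SheetLayout {
  const columns = Math.ceil(Math.sqrt(frameCount));
  const rows = Math.ceil(frameCount / columns);
  return { columns: columns, rows: rows, width: columns * frameWidth, height: rows * frameHeight };
}

// Top-left corner of frame `index` inside the sheet (source rect for drawImage,
// or a negative background-position in CSS).
function spriteOffset(layout: SheetLayout, frameWidth: number, frameHeight: number, index: number): { x: number; y: number } {
  return {
    x: (index % layout.columns) * frameWidth,
    y: Math.floor(index / layout.columns) * frameHeight,
  };
}

// Illustrative numbers: 120 frames at 1280x720.
const sheet = layoutSpriteSheet(1280, 720, 120);
// 11 x 11 grid -> 14080 x 7920 px, larger than a 4096 or 8192 px texture limit.
const fitsIn8k = sheet.width <= 8192 && sheet.height <= 8192; // false
```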

In the space of blockbuster scroll-tied animations, Apple has long been considered the leader, pushing and iterating on its approach since the launch of the Mac Pro back in 2014. After some initial research, it became clear that sometime in 2018 Apple implemented a new approach, one that requires extensive research to fully understand. Apple seems to call this technology Flow, and it has enabled Apple to use these high-polish animations more liberally across its product promo pages.

Modern Apple product promo pages, like those for the iPad Air and AirPods Pro, are for the most part independent codebases that share only small pieces of global and local navigation and footer code. This minimal approach keeps page weight down to begin with, so when image sequences come into play they end up representing a large percentage of the overall page weight. It was therefore clearly in Apple's interest to invest in the research required to develop an approach like Flow, shipping only the minimum pixels and kilobytes needed for a smooth animation.

Understanding Flow

The EFF defends the right of coders like me to reverse-engineer software, and I believe my analysis of Flow falls within its guidelines.

In my analysis of the Flow client libraries Apple uses, I found that Apple prepares assets with a custom, lossless, inter-frame diff-based sequence compression algorithm and reconstructs them on the fly in the browser on page load. This significantly reduces the weight of a full image sequence and has helped Apple use more and longer sequences since its first foray with the Mac Pro site 10 years ago. These engaging animations have been the leading standard during that time, and executing them well has been part of Apple's marketing moat.

Sample files

I don't own the rights to these files, so I will link out to Apple's hosted versions:

Example keyframe: Link

Example diff frame: Link

Example flow manifest: Link

Example mask manifest: Link

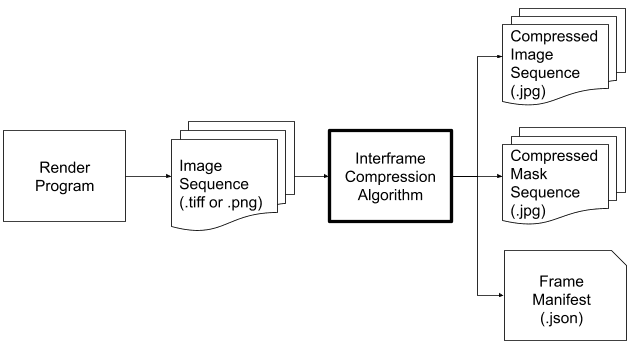

Asset Generation

On the asset generation side, it appears that Apple has developed a Flow app or script that generates the compressed JPG sequences and the manifests the client code depends on to reconstruct the sequence. This tool works from a full-quality render (likely a lossless .mov, or a sequence of TIFFs or PNGs to support transparency) to limit the number of times lossy compression is applied during encoding. Flow supports transparency masking through an additional sequence of JPGs (black-and-white images representing alpha), which is another significant image-weight win and part of what allows the textures in the main sequence to be packed so efficiently.
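Recombining the two sequences on the client is straightforward once both JPGs are decoded. A minimal CPU sketch, assuming both frames have been drawn to canvases and read back as RGBA arrays of equal size (as `getImageData()` returns), and assuming white in the mask means opaque; Flow's actual mask convention is not confirmed. In a WebGL pipeline the same combination would more naturally happen in a fragment shader that samples both textures.

```typescript
// Combines a decoded colour frame with its black-and-white mask frame.
// Both inputs are RGBA byte arrays of the same length; because the mask is
// greyscale, its red channel is used as the alpha value.
function applyAlphaMask(color: Uint8ClampedArray, mask: Uint8ClampedArray): Uint8ClampedArray {
  if (color.length !== mask.length) {
    throw new Error("colour and mask frames must be the same size");
  }
  const out = new Uint8ClampedArray(color.length);
  for (let i = 0; i < color.length; i += 4) {
    out[i] = color[i];         // R
    out[i + 1] = color[i + 1]; // G
    out[i + 2] = color[i + 2]; // B
    out[i + 3] = mask[i];      // A: white (255) = opaque, black (0) = transparent (assumed)
  }
  return out;
}

// Usage: two pixels, the first fully opaque, the second fully transparent.
const rgba = applyAlphaMask(
  new Uint8ClampedArray([10, 20, 30, 255, 40, 50, 60, 255]),
  new Uint8ClampedArray([255, 255, 255, 255, 0, 0, 0, 255])
);
```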

Fig: Asset Flow:

Asset Consumption

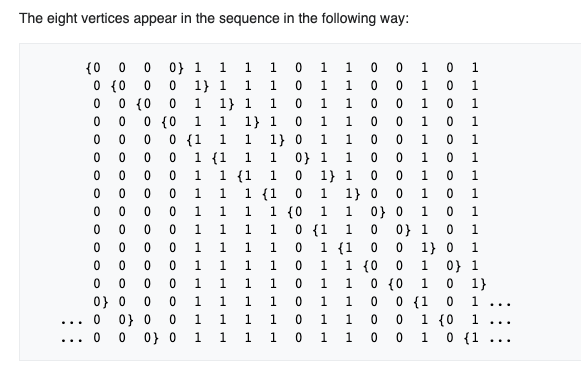

On the client, Apple uses a combination of BitSet math and De Bruijn matrix lookup tables to reconstruct animation frames from the keyframes, diff frames, and manifest supplied on initial page load, all in pursuit of reducing duplicated frame data and therefore overall page weight.
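To make the BitSet and De Bruijn pairing concrete, here is a generic sketch, not a reconstruction of Apple's code: a bit set stored in 32-bit words, with set bits enumerated using the classic De Bruijn multiply-and-lookup trick from the bit-twiddling hacks page in the references. A structure like this could, for example, mark which blocks of a frame change, and the De Bruijn table finds each set bit in constant time instead of testing all 32 bits of a word. The class and method names are my own.

```typescript
// De Bruijn lookup table for the constant 0x077CB531: maps the top 5 bits of
// (lowestBit * 0x077CB531) back to that bit's index.
const DEBRUIJN_INDEX = [
  0, 1, 28, 2, 29, 14, 24, 3, 30, 22, 20, 15, 25, 17, 4, 8,
  31, 27, 13, 23, 21, 19, 16, 7, 26, 12, 18, 6, 11, 5, 10, 9,
];

// Index (0-31) of the lowest set bit of a 32-bit word, or -1 for 0.
function lowestSetBit(word: number): number {
  if ((word | 0) === 0) return -1;
  const lowest = (word & -word) >>> 0; // isolate the lowest set bit: a power of two
  // lowest is 2^k, so the product is exact in floating point; >>> 27 keeps the
  // top 5 bits of its low 32 bits, matching a uint32 multiply.
  return DEBRUIJN_INDEX[(lowest * 0x077cb531) >>> 27];
}

class BitSet {
  private readonly words: Uint32Array;

  constructor(size: number) {
    this.words = new Uint32Array(Math.ceil(size / 32));
  }

  set(i: number): void { this.words[i >>> 5] |= 1 << (i & 31); }
  unset(i: number): void { this.words[i >>> 5] &= ~(1 << (i & 31)); }
  toggle(i: number): void { this.words[i >>> 5] ^= 1 << (i & 31); }
  has(i: number): boolean { return (this.words[i >>> 5] & (1 << (i & 31))) !== 0; }

  // In-place intersection / union with another set of the same size.
  and(other: BitSet): void {
    for (let w = 0; w < this.words.length; w++) this.words[w] &= other.words[w];
  }
  or(other: BitSet): void {
    for (let w = 0; w < this.words.length; w++) this.words[w] |= other.words[w];
  }

  // Visits every set bit in ascending order, one De Bruijn lookup per bit.
  forEach(visit: (index: number) => void): void {
    for (let w = 0; w < this.words.length; w++) {
      let word = this.words[w];
      while (word !== 0) {
        visit(w * 32 + lowestSetBit(word));
        word = (word & (word - 1)) >>> 0; // clear the lowest set bit
      }
    }
  }
}

// Usage: mark changed blocks 3, 40 and 63 of a hypothetical 64-block frame.
const changedSet = new BitSet(64);
changedSet.set(3);
changedSet.set(40);
changedSet.set(63);
const visited: number[] = [];
changedSet.forEach((i: number) => { visited.push(i); }); // visited = [3, 40, 63]
```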

On some pages, this frame reconstruction is done in a worker so it doesn't block the main UI thread, but the technique is still somewhat CPU-intensive and as such seems to be limited to more powerful devices. After a frame is created, it is loaded as a texture into a WebGL context and drawn with shaders, including fragment shaders (using this low-level WebGL library), and a separate script ties this into a global event system that tracks the browser frame rate (via requestAnimationFrame, or RAF) and user scroll. These Flow sequences are produced in small, medium, and large sizes across breakpoints, each with its corresponding manifest and images, though Apple seems to artificially limit Flow animations on mobile to Safari on iOS.

While the client is somewhat understood, the main gap is how exactly Apple generates the hash maps, manifests, and diff images on the asset side. This research is still ongoing and likely requires more effort and resourcing.
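I have not recovered Apple's asset-side algorithm, but the simplest way a tool could decide what goes into a diff image is to split each frame into fixed-size blocks and keep only the blocks that changed since the previous frame. This sketch does exactly that on raw RGBA frames. It is a naive baseline, not Flow's method; the block size and the `tolerance` parameter are my own assumptions, and any tolerance above 0 makes the scheme lossy.

```typescript
// True if any channel in the blockSize x blockSize block at (x0, y0) differs
// between the two RGBA frames by more than `tolerance`.
function blockDiffers(
  prev: Uint8ClampedArray, curr: Uint8ClampedArray, width: number,
  x0: number, y0: number, blockSize: number, tolerance: number
): boolean {
  for (let y = y0; y < y0 + blockSize; y++) {
    for (let x = x0; x < x0 + blockSize; x++) {
      const p = (y * width + x) * 4;
      for (let ch = 0; ch < 4; ch++) {
        if (Math.abs(curr[p + ch] - prev[p + ch]) > tolerance) return true;
      }
    }
  }
  return false;
}

// Returns the row-major indices of blocks that changed between two frames.
// Assumes width and height are multiples of blockSize.
function changedBlocks(
  prev: Uint8ClampedArray, curr: Uint8ClampedArray,
  width: number, height: number, blockSize: number, tolerance: number
): number[] {
  const cols = width / blockSize;
  const rows = height / blockSize;
  const changed: number[] = [];
  for (let by = 0; by < rows; by++) {
    for (let bx = 0; bx < cols; bx++) {
      if (blockDiffers(prev, curr, width, bx * blockSize, by * blockSize, blockSize, tolerance)) {
        changed.push(by * cols + bx);
      }
    }
  }
  return changed;
}

// Usage: two 4x4 frames split into 2x2 blocks (4 blocks in total).
const frameA = new Uint8ClampedArray(4 * 4 * 4);
const frameB = new Uint8ClampedArray(frameA);
frameB[(0 * 4 + 3) * 4] = 10; // pixel (3, 0) red +10 -> block 1
frameB[(3 * 4 + 0) * 4] = 2;  // pixel (0, 3) red +2  -> block 2, ignored when tolerance >= 2
const strictResult = changedBlocks(frameA, frameB, 4, 4, 2, 0);   // [1, 2]
const tolerantResult = changedBlocks(frameA, frameB, 4, 4, 2, 5); // [1]
```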

The end result of Apple's custom implementation is essentially a fully custom graphics pipeline that combines JPG-compressed images, a lossless diff scheme, a separate transparency mask, and a manifest file, allowing the full animation to be re-created efficiently on the fly before playback. This keeps the transmitted data as low as possible and offloads as much of the work as possible to a separate thread and WebGL, which reduces the additional scripting load the technique would otherwise require.

At the algorithm's core, the main optimization is a sequencing process that uses De Bruijn packing to compress a sequence of similar images into small, de-duplicated, sequential blocks of data. This in turn produces a vertex matrix that is encoded as base64 and placed in the Flow manifest, which is used to build the equivalent of a sprite map that finally renders the image onto the canvas or texture. This basic understanding points to a few possible solutions to the problem.
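The manifest-to-sprite-map step can be illustrated with a CPU version. This is a sketch under stated assumptions, not Flow's format: I assume the manifest has already been decoded into a list of hypothetical block copies (source position in the diff image, destination position in the frame), that all blocks are square and in bounds, and that the previous frame is the starting point. Flow appears to express the same idea as vertex data drawn with WebGL; copying pixels on the CPU just makes the logic easy to follow.

```typescript
// Hypothetical decoded manifest entry: copy one square block from the diff
// image at (srcX, srcY) to (dstX, dstY) in the frame being rebuilt.
interface BlockCopy {
  srcX: number;
  srcY: number;
  dstX: number;
  dstY: number;
}

interface RgbaBuffer {
  width: number;
  height: number;
  data: Uint8ClampedArray; // 4 bytes (RGBA) per pixel, row-major
}

// Rebuilds the next frame: start from a copy of the previous frame, then
// paste each changed block from the diff image. Blocks must lie in bounds.
function applyBlockCopies(prev: RgbaBuffer, diff: RgbaBuffer, copies: BlockCopy[], blockSize: number): RgbaBuffer {
  const next: RgbaBuffer = { width: prev.width, height: prev.height, data: new Uint8ClampedArray(prev.data) };
  for (let c = 0; c < copies.length; c++) {
    const b = copies[c];
    for (let row = 0; row < blockSize; row++) {
      const src = ((b.srcY + row) * diff.width + b.srcX) * 4;
      const dst = ((b.dstY + row) * next.width + b.dstX) * 4;
      // Copy one row of the block (blockSize pixels x 4 bytes).
      next.data.set(diff.data.subarray(src, src + blockSize * 4), dst);
    }
  }
  return next;
}

// Usage: a black 4x2 frame and a 2x2 diff image filled with 9s;
// one block copy pastes the diff into the right half of the frame.
const diffPixels = new Uint8ClampedArray(2 * 2 * 4);
for (let i = 0; i < diffPixels.length; i++) diffPixels[i] = 9;
const prevFrame: RgbaBuffer = { width: 4, height: 2, data: new Uint8ClampedArray(4 * 2 * 4) };
const diffImage: RgbaBuffer = { width: 2, height: 2, data: diffPixels };
const nextFrame = applyBlockCopies(prevFrame, diffImage, [{ srcX: 0, srcY: 0, dstX: 2, dstY: 0 }], 2);
```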

Here's a high-level overview of what I believe each file does:

marcom-image-queue.js: This file defines a class that manages a queue of images. It has methods to enqueue images, start and stop the queue, and process the next item in the queue. It also has a method to load an image given its URL.

marcom-main-bitset.js: This file defines a BitSet class, which is a data structure that can efficiently store and manipulate sets of integers. It has methods for setting, unsetting, and toggling bits, performing bitwise operations, and other utility functions.

marcom-flow-worker.js: This file defines a FlowWorker class that processes a manifest of image frames. It has methods to parse frames and create a number from a base64 range. It also has a message handler for communication with a web worker.
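The "create a number from a base64 range" step suggests the manifest packs numeric data into base64 text. A minimal sketch of how such a helper could work, assuming the standard RFC 4648 alphabet and big-endian order with 6 bits per character; I have not confirmed that Flow uses this exact layout, and the function name and record format are hypothetical.

```typescript
const BASE64_ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

// Reads characters [start, end) of a base64 string as one big-endian number,
// 6 bits per character. Ranges of up to 8 characters (48 bits) stay within
// the range of integers a JavaScript number represents exactly.
function numberFromBase64Range(encoded: string, start: number, end: number): number {
  let value = 0;
  for (let i = start; i < end; i++) {
    const digit = BASE64_ALPHABET.indexOf(encoded.charAt(i));
    if (digit < 0) throw new Error("invalid base64 character at index " + i);
    value = value * 64 + digit; // multiply rather than shift to avoid 32-bit overflow
  }
  return value;
}

// Usage: a hypothetical record of three 2-character fields.
const record = "BA//AD";
const fields = [
  numberFromBase64Range(record, 0, 2), // "BA" -> 1 * 64 + 0 = 64
  numberFromBase64Range(record, 2, 4), // "//" -> 63 * 64 + 63 = 4095
  numberFromBase64Range(record, 4, 6), // "AD" -> 0 * 64 + 3 = 3
];
```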

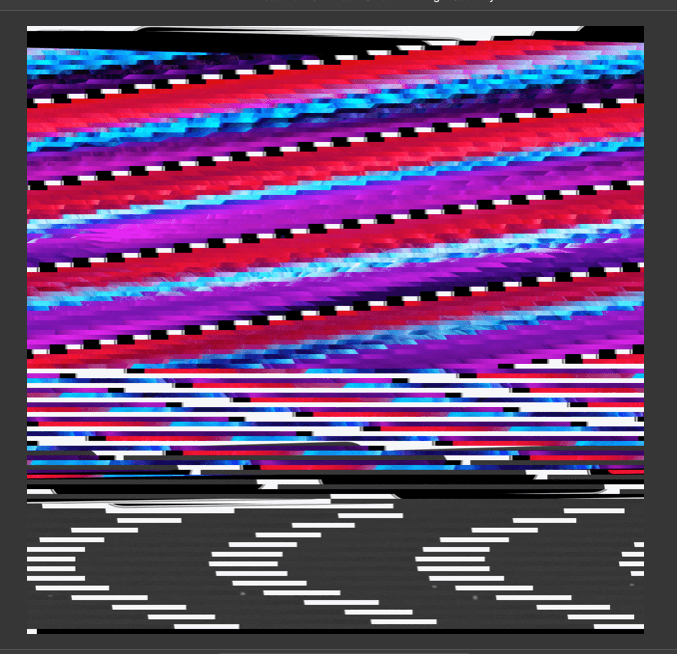

Identified Patterns

Fig: De Bruijn patterns identified:

The compression scheme used here seems to be a combination of image quantization, Huffman coding, and possibly some form of delta encoding. Here's a high-level overview of each technique:

Image Quantization: This is a process that reduces the number of distinct colors used in an image, which can significantly reduce the image size without degrading the quality of the image too much.

Huffman Coding: This is a common algorithm used for lossless data compression. The idea is to assign shorter codes to more frequently occurring characters and longer codes to less frequently occurring characters.

Delta Encoding: This is a way of storing or transmitting data in the form of differences between sequential data rather than complete files. It is sometimes called delta compression, particularly where archival histories of changes are required.
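Two of these techniques combine naturally. This sketch is generic and not taken from Flow: it delta-encodes a frame against the previous one byte by byte (lossless and exactly reversible), and shows how quantizing both frames first can turn small noise, such as JPEG artifacts, into zeros that an entropy coder like Huffman coding compresses well. The 8-level quantization and the sample bytes are arbitrary choices for illustration.

```typescript
// Snaps each byte to one of `levels` evenly spaced values (levels >= 2). Lossy.
function quantize(bytes: Uint8Array, levels: number): Uint8Array {
  const step = 255 / (levels - 1);
  const out = new Uint8Array(bytes.length);
  for (let i = 0; i < bytes.length; i++) out[i] = Math.round(Math.round(bytes[i] / step) * step);
  return out;
}

// Delta encoding: each byte becomes (curr - prev) mod 256, so unchanged bytes become 0.
function deltaEncode(prev: Uint8Array, curr: Uint8Array): Uint8Array {
  const out = new Uint8Array(curr.length);
  for (let i = 0; i < curr.length; i++) out[i] = (curr[i] - prev[i]) & 0xff;
  return out;
}

// Exact inverse of deltaEncode.
function deltaDecode(prev: Uint8Array, delta: Uint8Array): Uint8Array {
  const out = new Uint8Array(delta.length);
  for (let i = 0; i < delta.length; i++) out[i] = (prev[i] + delta[i]) & 0xff;
  return out;
}

function countZeros(bytes: Uint8Array): number {
  let zeros = 0;
  for (let i = 0; i < bytes.length; i++) if (bytes[i] === 0) zeros++;
  return zeros;
}

// Usage: the first three bytes differ only by noise, the last one really changed.
const prevBytes = new Uint8Array([100, 101, 150, 200]);
const currBytes = new Uint8Array([102, 99, 151, 40]);
const rawDelta = deltaEncode(prevBytes, currBytes);  // [2, 254, 1, 96]: no zeros
const qPrev = quantize(prevBytes, 8);                // [109, 109, 146, 182]
const qCurr = quantize(currBytes, 8);                // [109, 109, 146, 36]
const quantDelta = deltaEncode(qPrev, qCurr);        // [0, 0, 0, 110]: three zeros
const restored = deltaDecode(qPrev, quantDelta);     // equals qCurr
```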

Proposed Solutions

Unfortunately, a temporal, diff-based texture packing approach for image sequences like the one Apple uses is completely undocumented in the web space. While it clearly took a significant amount of research for Apple to arrive at Flow, we don't necessarily need to follow exactly the same approach. Apple has long been the clear leader here on the web, but there is a large body of research in this space (specifically for medical imagery and DNA sequences), so given enough time we can create and own an approach of our own. Such an approach would also have broad value across the web, so there may be merit in developing it in the open and open-sourcing it so others can contribute ideas to further optimize the compression (much like Airbnb did with Lottie for SVG).

Specifically, any method developed should likely revolve around versioned manifests, as Flow does, so the approach can evolve with new temporal image compression techniques. Any new method should also evaluate the performance gains of more modern image formats like .webp and .avif in browsers that support them, or potentially GPU-compressed texture formats like Basis or KTX2.
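A sketch of what a versioned manifest could look like, with hypothetical field names and version numbers: version 1 points at a single JPG keyframe, while version 2 lists the same keyframe in several formats so the client can pick the best one it supports. In a real page, `supported` would come from runtime feature detection. The point of the version field is that old assets keep decoding while new compression schemes are added.

```typescript
// Hypothetical manifest versions.
interface ManifestV1 {
  version: 1;
  frameCount: number;
  keyframe: string; // URL of a JPG keyframe
}

interface ManifestV2 {
  version: 2;
  frameCount: number;
  keyframe: { [format: string]: string }; // e.g. { avif: "...", webp: "...", jpg: "..." }
}

type FlowLikeManifest = ManifestV1 | ManifestV2;

// Rejects versions this client does not know how to decode.
function parseManifest(json: string): FlowLikeManifest {
  const data = JSON.parse(json);
  if (data.version !== 1 && data.version !== 2) {
    throw new Error("unsupported manifest version: " + data.version);
  }
  return data as FlowLikeManifest;
}

// Picks the keyframe URL for this browser, preferring newer formats.
function resolveKeyframe(manifest: FlowLikeManifest, supported: string[]): string {
  if (manifest.version === 1) return manifest.keyframe;
  const preference = ["avif", "webp", "jpg"];
  for (let i = 0; i < preference.length; i++) {
    const format = preference[i];
    const url = manifest.keyframe[format];
    if (url && supported.indexOf(format) >= 0) return url;
  }
  throw new Error("no keyframe in a supported format");
}

// Usage
const v2 = parseManifest('{"version":2,"frameCount":120,"keyframe":{"avif":"k.avif","jpg":"k.jpg"}}');
const chosen = resolveKeyframe(v2, ["webp", "jpg"]); // "k.jpg": no WebP entry, AVIF unsupported
```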

Next Gen codecs

The advent of next-generation image formats like BPG and Daala presents exciting opportunities for enhancing the quality and efficiency of scroll-tied animations and image sequences. These formats utilize advanced compression algorithms, offering significant reductions in file size without compromising on visual quality.

BPG, or Better Portable Graphics, is based on the High Efficiency Video Coding (HEVC) video codec. It has demonstrated better compression than traditional formats like JPEG (the format Apple uses for Flow), delivering smaller files at similar visual quality. This could allow higher-resolution animations without increasing page weight. BPG also supports higher bit depths, additional color spaces, and an alpha channel for transparency, which could make animations richer and more vibrant.

Daala, on the other hand, is a video codec developed by the Xiph.Org Foundation, the organization behind the Ogg and Opus formats. Daala aimed to surpass the performance of HEVC and VP9 while avoiding patent encumbrances, and parts of its technology were later contributed to AV1, the codec AVIF is built on. This lineage makes it a promising direction for future web standards.

However, adopting these next-gen formats is not without challenges. Patent encumbrances, compatibility issues, and the need for broad support across platforms and devices are significant hurdles. Despite these challenges, BPG and Daala show real potential to change how we approach scroll-tied animations and image sequences.

What's next?

In more recent releases, Apple seems to have trended away from custom pages and toward standardized page templates. This change has coincided with a large shift in how Apple does its product animations, which now rely much more heavily on full 3D formats like glTF and USDZ. This makes sense: Apple has been producing these files for its AR product preview feature for a number of years, so why not improve the quality of those models until they meet its standard for use on the web? This, along with advances in 3D rendering on the web, including mesh compression with Draco and other techniques I have covered in more depth in my article Performant 3D on the web, has likely allowed Apple to rely on this approach for new sites like the recently launched Vision Pro site.

This raises the question: if Apple doesn't use or need Flow anymore, why spend time investigating it? Ever since I discovered that Apple used this technique instead of the ones I was already aware of, it has sat in the back of my mind. Something like this should exist, at least as an option, in developers' toolkits. Apple unfortunately is not in the habit of open-sourcing the secrets powering its marketing sites, but I thought it would be interesting to try to uncover more about why and how Apple developed this previously unseen and not-yet-replicated animation approach.

Research References

- https://superuser.com/questions/1311850/compressing-many-similar-large-images

- http://mattmahoney.net/dc/#jpeg

- http://mattmahoney.net/dc/dce.html

- https://leiless.me/2017/07/01/lowest-bit-set.html

- https://www.chessprogramming.org/BitScan

- https://github.com/skeeto/igloojs

- https://patents.google.com/patent/WO2015176280A1/en

- http://graphics.stanford.edu/~seander/bithacks.html#ZerosOnRightMultLookup

- https://www.semanticscholar.org/paper/Bit-allocation-for-lossy-image-set-compression-Cheng-Lerner/778e731e714786000b5f94a68b5a854a7aa5cf82

- https://en.wikipedia.org/wiki/De_Bruijn_sequence

- https://github.com/mainroach/crabby

- Crabby Algorithm Paper

- https://en.wikipedia.org/wiki/De_Bruijn%27s_theorem

- https://hackage.haskell.org/package/bits-0.5/src/cbits/debruijn.c

- https://50linesofco.de/post/2017-02-13-bits-and-bytes-in-javascript

- https://pmelsted.wordpress.com/2013/11/23/naive-python-implementation-of-a-de-bruijn-graph/

- https://towardsdatascience.com/genome-assembly-using-de-bruijn-graphs-69570efcc270

- https://lottiefiles.com/blog/engineering/lottie-vs-raster-animation

- https://datagenetics.com/blog/october22013/index.html

- https://lwn.net/Articles/625535/